
I am working as a Deep Learning Engineer at Oracle, Bangalore.

My interests lie in Natural Language Processing, Domain Adaptation, Deep Reinforcement Learning, Graph Neural Networks, and Capsule Networks.

Email / LinkedIn / Resume / Google Scholar / Personal Blog


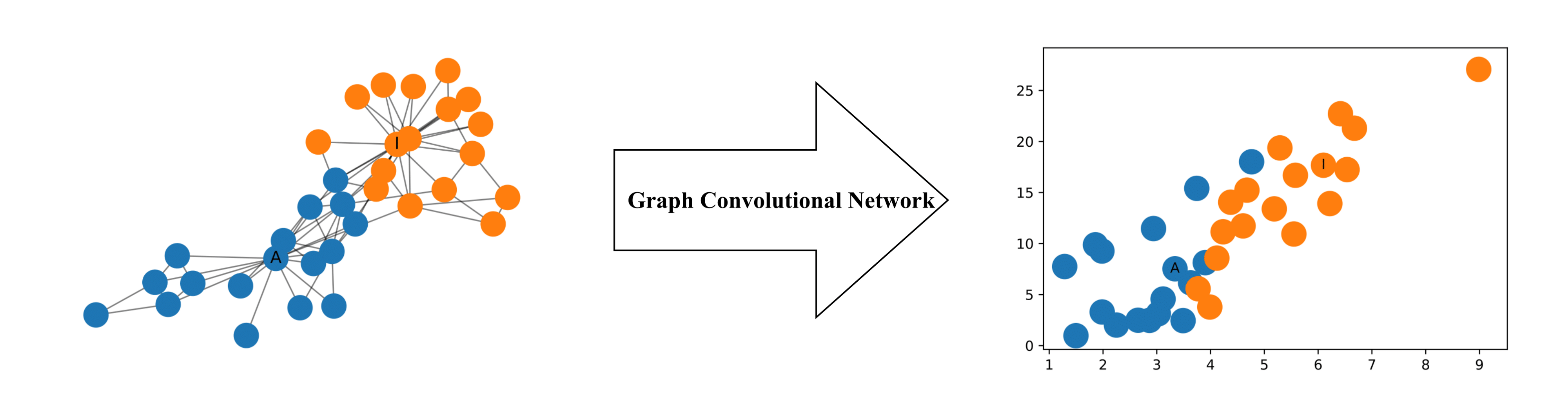

Explained the basics of graph neural networks, followed by graph convolutional networks (GCNs) and their use for language understanding. Also implemented a GCN-based research paper in TensorFlow Keras.


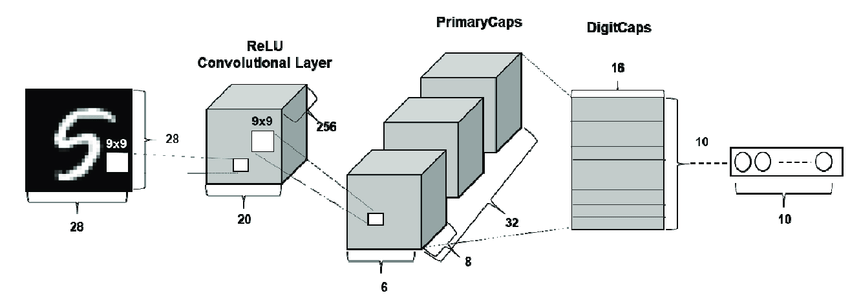

Explained the basics of capsule networks, followed by the dynamic routing mechanism between capsules, and implemented a capsule network in PyTorch for image classification.
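The routing-by-agreement loop between capsules can be sketched in plain Python. This is a minimal, framework-free sketch. It assumes the squash non-linearity and the iterative coupling update from the dynamic routing paper (Sabour et al., 2017). The names `squash` and `dynamic_routing` and the nested-list layout of the prediction vectors `u_hat` are illustrative choices, not taken from the PyTorch implementation described above.

```python
import math


def squash(s):
    """Shrink vector s to a length in [0, 1) while keeping its direction."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0 for _ in s]
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_routing(u_hat, num_iterations=3):
    """Route predictions u_hat[i][j] (input capsule i -> output capsule j)."""
    num_in = len(u_hat)
    num_out = len(u_hat[0])
    dim = len(u_hat[0][0])
    # Routing logits b[i][j] start at zero, so couplings start uniform.
    b = [[0.0] * num_out for _ in range(num_in)]
    v = []
    for _ in range(num_iterations):
        # Coupling coefficients: softmax over output capsules for each input.
        c = [softmax(b_i) for b_i in b]
        v = []
        for j in range(num_out):
            # Weighted sum of the predictions for output capsule j, then squash.
            s_j = [sum(c[i][j] * u_hat[i][j][d] for i in range(num_in))
                   for d in range(dim)]
            v.append(squash(s_j))
        # Increase the logit where a prediction agrees (dot product) with the output.
        for i in range(num_in):
            for j in range(num_out):
                b[i][j] += sum(u_hat[i][j][d] * v[j][d] for d in range(dim))
    return v
```

Suppose two input capsules agree on the first output capsule but contradict each other on the second. The couplings then shift toward the first capsule, so its output vector ends up longer than the second one.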


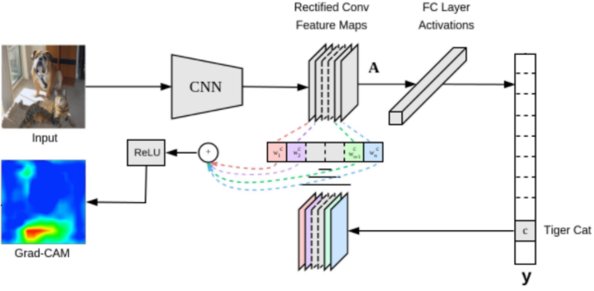

Explained and implemented Grad-CAM in PyTorch to compute class activation maps for a given (image, class) pair.


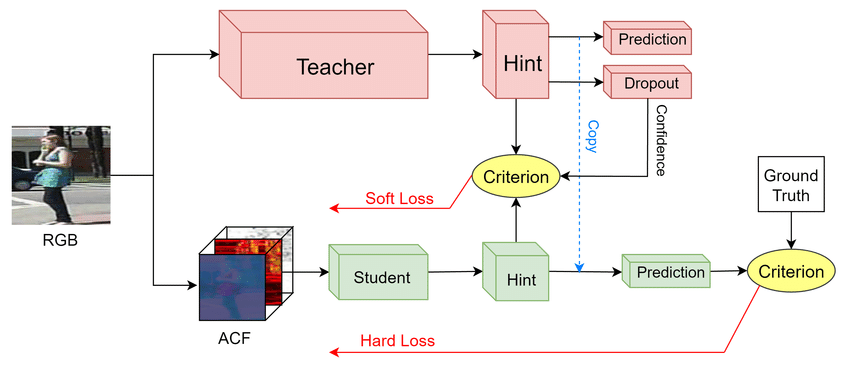

TensorFlow Keras implementation of a continual-learning research paper that tackles catastrophic forgetting by pseudo-labelling the new data.


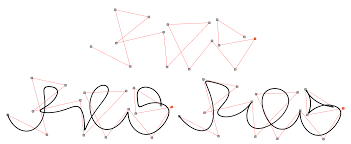

Implemented and successfully trained a model in TensorFlow, based on the research paper by DeepMind, that uses LSTM layers followed by a Mixture Density Network to generate handwriting strokes.


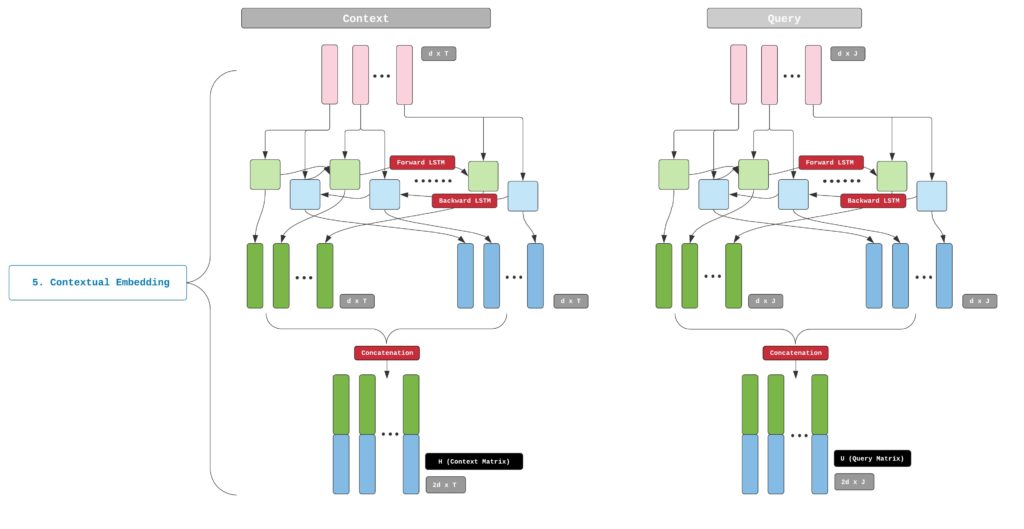

Implemented in TensorFlow a research paper that achieves fine-grained representations of the context and query by learning a shared similarity matrix and a bidirectional attention mechanism, i.e., Context2Query and Query2Context.
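The two attention directions built on a shared similarity matrix can be illustrated with a small plain-Python sketch. It assumes the trilinear similarity S[t][j] = w · [h_t; u_j; h_t ∘ u_j] popularised by BiDAF. The weight vector `w` and all function names are hypothetical; in a real model `w` is learned, and the context rows `H` and query rows `U` come from an encoder.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def similarity_matrix(H, U, w):
    """S[t][j] = w . [h_t; u_j; h_t * u_j] (assumed trilinear form)."""
    S = []
    for h in H:
        row = []
        for u in U:
            features = h + u + [a * b for a, b in zip(h, u)]
            row.append(sum(wi * f for wi, f in zip(w, features)))
        S.append(row)
    return S


def weighted_sum(weights, vectors):
    """Sum of vectors scaled by the matching weights."""
    dim = len(vectors[0])
    return [sum(wt * vec[d] for wt, vec in zip(weights, vectors)) for d in range(dim)]


def bidirectional_attention(H, U, w):
    S = similarity_matrix(H, U, w)
    # Context2Query: each context word attends over all query words.
    c2q = [weighted_sum(softmax(row), U) for row in S]
    # Query2Context: attend over context words using each row's best match,
    # then tile the single summary vector across every context position.
    b = softmax([max(row) for row in S])
    h_tilde = weighted_sum(b, H)
    q2c = [list(h_tilde) for _ in H]
    return S, c2q, q2c
```

The two directions differ in scope. Context2Query gives each context word its own mixture of query words, while Query2Context produces a single context summary that is copied to every position.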


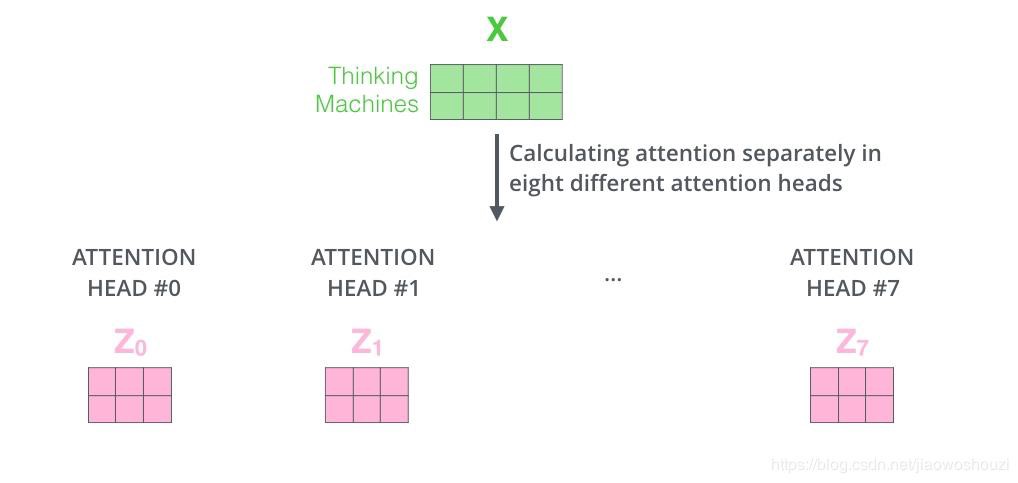

Studied and explained the research paper "Attention Is All You Need", which proposes a new way of attending to the encoded information by means of multiple attention heads.
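The core computation behind multiple heads can be sketched without a framework. This is a simplified illustration. The paper uses learned per-head projection matrices; here, each head simply works on its own slice of the model dimension, and the final output projection is omitted. The function names are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V for lists of row vectors."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        outputs.append([sum(wt * v[d] for wt, v in zip(weights, V))
                        for d in range(len(V[0]))])
    return outputs


def column_slice(M, lo, hi):
    """Columns lo..hi-1 of every row (stand-in for a learned per-head projection)."""
    return [row[lo:hi] for row in M]


def multi_head_attention(Q, K, V, num_heads):
    d_model = len(Q[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    heads = []
    for h in range(num_heads):
        lo, hi = h * d_head, (h + 1) * d_head
        # Each head attends independently over its own subspace.
        heads.append(scaled_dot_product_attention(
            column_slice(Q, lo, hi), column_slice(K, lo, hi), column_slice(V, lo, hi)))
    # Concatenate the per-head outputs position by position.
    return [[x for head in heads for x in head[i]] for i in range(len(Q))]
```

Each head computes its own attention weights, so different heads can focus on different positions of the same sequence. Their outputs are then concatenated back to the model dimension.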


Explained and implemented the fundamental building blocks of a BERT transformer in TensorFlow Keras.
